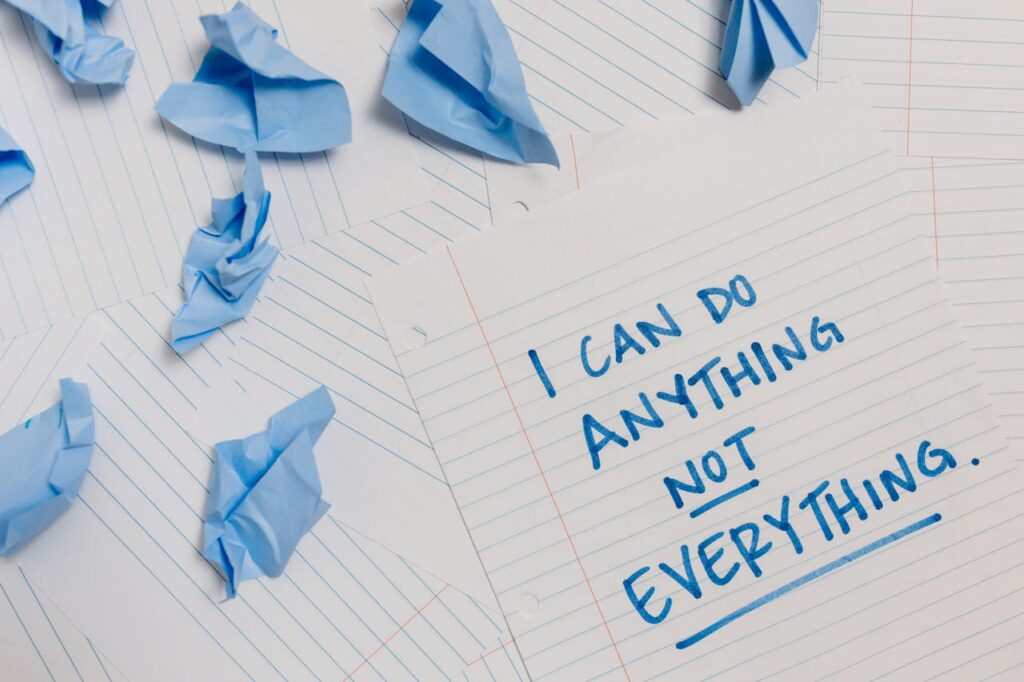

I have heard some teams say that they are aiming for 100% test automation. But 100% test automation isn’t practical or desirable. In fact, I would argue that, if the goal is meaningful risk mitigation, 100% test automation isn’t even possible.

Sure, if testing is just a tick-box exercise, and you don’t care about the quality of your solution, then just run an automated test set. But if you genuinely want to test your solution, you’ll need your testers to get their hands dirty.

Despite this, I still hear from companies that want to automate their entire functional test set, not understanding the valuable role of manual testing or basic economic principles like diminishing returns…

The Law of Diminishing Returns in Testing

The law of diminishing returns states that beyond a certain point, additional effort or resources invested in a process produce progressively smaller gains. In software testing:

- Early automation efforts (e.g. essential and regularly executed tests) deliver high value by catching critical bugs and reducing repetitive work.

- Later efforts (e.g. automating edge cases or cosmetic UI checks) yield diminishing returns, as the cost of writing and maintaining these tests outweighs their benefits.
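To make the diminishing-returns argument concrete, here is a minimal Python sketch of a first-year break-even estimate. The `AutomationCandidate` fields and every figure in it are hypothetical illustrations, not measurements. The model simply compares the manual effort a test would replace each year against the assumed cost of building and maintaining its automated version.

```python
from dataclasses import dataclass


@dataclass
class AutomationCandidate:
    """Hypothetical effort figures for one manual test case."""
    name: str
    manual_minutes_per_run: float
    runs_per_year: int
    build_hours: float                 # one-off scripting and QA effort (assumed)
    maintenance_hours_per_year: float  # upkeep as the application changes (assumed)


def net_hours_saved_first_year(candidate: AutomationCandidate) -> float:
    """Manual hours replaced in year one, minus the cost of automating."""
    manual_hours = candidate.manual_minutes_per_run * candidate.runs_per_year / 60
    automation_hours = candidate.build_hours + candidate.maintenance_hours_per_year
    return manual_hours - automation_hours


# Illustrative numbers only: a frequently run regression test
# versus an edge case that is executed a few times a year.
candidates = [
    AutomationCandidate("login regression", 10, 250, 8, 4),
    AutomationCandidate("rare edge case", 15, 4, 12, 6),
]

for candidate in candidates:
    saved = net_hours_saved_first_year(candidate)
    verdict = "pays off" if saved > 0 else "costs more than it saves"
    print(f"{candidate.name}: {saved:+.1f} hours ({verdict})")
```

Under these assumed figures, the frequently executed test recovers its cost within the year, while the rarely executed edge case does not. A real estimate would also need to weigh the risk the test mitigates, which this sketch ignores.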

Which Tests Are Worth Automating?

You see, not all tests make good automation candidates. Yes, AI has made test automation more efficient than ever, but adding a new automated test still requires setup effort. Each test must be scripted (ideally adapted from a manual test) and QA’d, and multiple datasets will probably need to be sourced and prepared.

The following checklist will help you determine which manual functional test cases to automate:

- Does it have a high execution frequency?

- Is it time-consuming?

- Is it complex?

- Is it business-critical functionality?

- Is the feature you’re testing stable?

- Is automation technically feasible?

- Does it run across multiple device/OS combinations?

The more of these boxes your test ticks, the more appropriate it is to automate. If it doesn’t tick any, or even if it just doesn’t tick the first one, then you should probably avoid automating it.
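The checklist above can be sketched as a small scoring helper. This is only one possible encoding: the criterion keys and the `automation_recommendation` function are hypothetical names, and the only rule applied is the one stated above (no ticks, or no tick for execution frequency, means keep the test manual).

```python
from typing import Dict, Tuple

# One key per checklist question; the names are illustrative.
CRITERIA = [
    "high_execution_frequency",
    "time_consuming",
    "complex",
    "business_critical",
    "stable_feature",
    "technically_feasible",
    "multi_device_or_os",
]


def automation_recommendation(answers: Dict[str, bool]) -> Tuple[int, str]:
    """Return (number of criteria met, recommendation) for one test case."""
    score = sum(1 for criterion in CRITERIA if answers.get(criterion, False))
    runs_often = answers.get("high_execution_frequency", False)
    if score == 0 or not runs_often:
        return score, "keep manual"
    return score, "candidate for automation"


# Example: a stable, business-critical regression test that runs every build.
checkout_regression = {
    "high_execution_frequency": True,
    "business_critical": True,
    "stable_feature": True,
    "technically_feasible": True,
}
score, recommendation = automation_recommendation(checkout_regression)
print(f"{score} of {len(CRITERIA)} criteria met: {recommendation}")
```

The score is best used to rank candidates against each other rather than as a hard threshold, since the article gives no cut-off beyond the first question.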

By following these core principles, you will have efficient coverage and a positive return on investment—that is, if you select a decent testing tool such as OpenText Functional Testing (previously known as UFT One).

The Irreplaceable Value of Manual Testing

As a result, you will need to do some manual testing, but that’s OK. Better than OK, even: it’s wholly desirable and the most effective way to tackle certain test cases.

Yes, automation excels in repetitive tasks and regression checks, but—at least for now—certain types of testing remain the domain of human testers. Understanding which ones and why helps inform a balanced approach to testing and high software quality.

Exploratory Testing: The Human Edge

From a purely functional testing perspective, exploratory testing is the most obvious type of testing that should not, and arguably cannot, be automated. It’s a technique that relies on testers simultaneously learning, designing, and executing tests, and it showcases the unique strengths of manual testing:

- Intuition and Creativity: Human testers can follow hunches, explore unexpected paths, and devise tests on the fly based on their understanding of user behaviour and system quirks. This adaptability is crucial for uncovering edge cases that automated scripts might miss.

- Context-Aware Testing: Testers can consider the broader context of how a feature fits into the overall user experience, something automated tests struggle to replicate. For example, a human tester might notice that while a feature works as specified, it feels clunky or out of place in the larger workflow.

- Evolving Test Scenarios: As testers interact with the system, they can modify their approach based on what they discover. This dynamic process allows them to identify issues that weren’t anticipated during test planning.

Other Areas Where Manual Testing Shines

- Usability Testing: Evaluating the ease of use, intuitiveness, and overall user experience requires human judgment. Automated tools can’t assess whether an interface feels right or if a workflow frustrates users.

- Accessibility Testing: Similarly, while some accessibility checks can be automated, many aspects require human evaluation. Testers can better assess whether assistive technologies work as intended and if the overall experience is genuinely accessible.

- Ad-Hoc Testing: The most critical bugs are sometimes found through unstructured, spontaneous testing sessions. This approach allows testers to think outside the box and test scenarios that weren’t initially considered.

- Visual and Aesthetic Evaluation: Automated tools can check for basic layout issues, but they can’t judge a user interface’s aesthetic appeal or brand consistency. Human testers are essential for ensuring the visual quality of software.

- Plus any tests that don’t satisfy the checklist above.

Effective Testing Needs a Synergy of Manual and Automated Testing

The most effective testing strategies leverage both automated and manual approaches.

Automated testing handles repetitive, high-volume tests, freeing up human testers for more nuanced work. Manual testing focuses on areas requiring judgment, creativity, and adaptability.

By recognising the unique strengths of automated and manual testing, teams can create a comprehensive testing strategy that maximises efficiency while ensuring thorough coverage of all aspects of software quality.

If you want to get really clever, you could also implement a further level of hybridisation by using automation to support exploratory testing, such as generating test data or setting up complex scenarios for manual exploration.
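As a rough illustration of automation supporting exploratory testing, the Python sketch below generates a reproducible batch of awkward customer records for a tester to load before a manual session. The record shape, the `generate_customers` function, and the chosen edge values are all assumptions made for this example; a real data set would follow your application’s own schema.

```python
import random
import string
from typing import Dict, List

# Hand-picked values that often expose input-handling problems (illustrative).
EDGE_NAMES = ["", "O'Brien", "李小龙", "a" * 256, "  padded  "]
EDGE_AGES = [-1, 0, 17, 18, 65, 120, 121]


def generate_customers(count: int, seed: int = 0) -> List[Dict[str, object]]:
    """Build `count` test records, starting with the edge-case names."""
    rng = random.Random(seed)  # seeded so a tester can regenerate the same data
    records = []
    for index in range(count):
        if index < len(EDGE_NAMES):
            name = EDGE_NAMES[index]
        else:
            length = rng.randint(1, 20)
            name = "".join(rng.choices(string.ascii_letters, k=length))
        records.append({"id": index + 1, "name": name, "age": rng.choice(EDGE_AGES)})
    return records


for record in generate_customers(8, seed=42):
    print(record)
```

Seeding the generator means that a tester who finds a defect can pass the seed to a colleague, who can then recreate exactly the same data.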

Enabling Balanced Testing Strategies with OpenText’s Integrated Toolset

To achieve the optimal balance of manual and automated testing outlined in this article, teams need tools that seamlessly support both approaches. OpenText’s testing solutions provide an integrated ecosystem for effectively implementing this strategy:

- OpenText Functional Testing (previously known as UFT One) for automation

- OpenText Sprinter for manual testing

- OpenText Application Quality Management (previously known as ALM QC) for waterfall test execution and test management

- OpenText Software Delivery Management (previously ValueEdge Quality/ALM Octane) for Agile and DevOps test execution and test management

This ecosystem enables your teams to automate tests when they meet checklist criteria while preserving human testing for domains like accessibility validation or edge-case exploration.